Most people have better things to do on a Saturday night after the kids are asleep. Well, this is my idea of a fun evening… Signing up for the guided project in predicting diabetes by using random forests. Here we go…

Course Objectives

In this course, we are going to focus on four learning objectives:

Complete a random Training and Test set from one Data source using both an R function and using Base R.

Practice exploratory data analysis using ggplot2

Apply a Linear Model, GBM, Random Forest model to a data set.

Examine the results using RMSE and a Confusion Matrix.

By the end of this course, you will be able to apply and examine your own Machine Learning algorithms.

Course Structure

This course is divided into 3 parts:

Course Overview: This introductory reading material.

Machine Learning: Predict Diabetes using Pima Indians Data Set: This is the hands on project that we will work on in Rhyme.

Graded Quiz: This is the final assignment that you need to pass in order to finish the course successfully.

Project Structure

The hands on project on Predict Diabetes using Pima Indians Data Set: is divided into following tasks:

Task 1: In this task the Learner will be introduced to the Course Objectives, which is to how to execute a Random Forest Model using R and the Pima Indians Data set. There will be a short discussion about the Interface and an Instructor Bio.

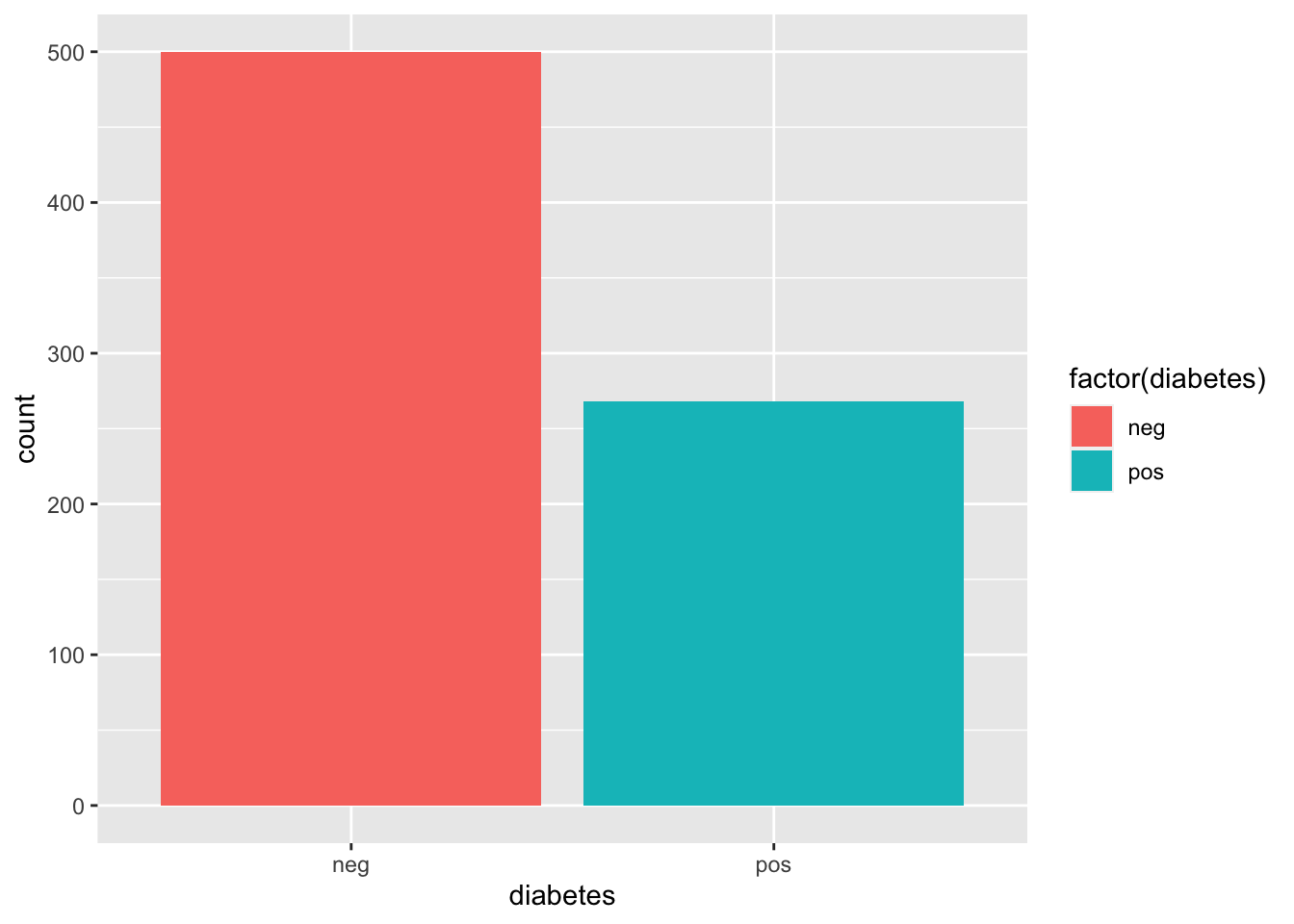

Task 2: The Learners will get practice doing Exploratory Analysis using ggplot2. This is important in order for the practitioner to see the balance of the data, especially as it relates to the Response Variable.

Task 3: The Learner will get experience creating Testing and Training Data Sets. There are multiple ways to do this in R. The Instructor will show the Learner how to do it using the Base R way and also using a function from the caret package.

Task 4: The Learner will get experience with the syntax of the Caret, an R package. Then the Learner will create a Random Forest Model in one function call.

Task 5: The Learner will get practice looking and comparing multiple models using RMSE among other metrics.

Meet the Instructor

My name is Chris Shockley. I use R every day for work. I like building quick machine learning models to answer questions. For fun I like Astronomy and working out. And of course teaching. I look forward to helping you by providing some fun projects that will assist you in your R journey.

library(neuralnet)

library(caret)## Loading required package: ggplot2## Loading required package: latticelibrary(tidyverse)## ── Attaching packages ─────────────────────────────────────── tidyverse 1.3.1 ──## ✔ tibble 3.1.7 ✔ dplyr 1.0.9

## ✔ tidyr 1.2.0 ✔ stringr 1.4.0

## ✔ readr 2.1.2 ✔ forcats 0.5.1

## ✔ purrr 0.3.4## ── Conflicts ────────────────────────────────────────── tidyverse_conflicts() ──

## ✖ dplyr::compute() masks neuralnet::compute()

## ✖ dplyr::filter() masks stats::filter()

## ✖ dplyr::lag() masks stats::lag()

## ✖ purrr::lift() masks caret::lift()library(mlbench)

library(e1071)data("PimaIndiansDiabetes")

df <- PimaIndiansDiabetes

str(df)## 'data.frame': 768 obs. of 9 variables:

## $ pregnant: num 6 1 8 1 0 5 3 10 2 8 ...

## $ glucose : num 148 85 183 89 137 116 78 115 197 125 ...

## $ pressure: num 72 66 64 66 40 74 50 0 70 96 ...

## $ triceps : num 35 29 0 23 35 0 32 0 45 0 ...

## $ insulin : num 0 0 0 94 168 0 88 0 543 0 ...

## $ mass : num 33.6 26.6 23.3 28.1 43.1 25.6 31 35.3 30.5 0 ...

## $ pedigree: num 0.627 0.351 0.672 0.167 2.288 ...

## $ age : num 50 31 32 21 33 30 26 29 53 54 ...

## $ diabetes: Factor w/ 2 levels "neg","pos": 2 1 2 1 2 1 2 1 2 2 ...?PimaIndiansDiabetes

ggplot(df,aes(diabetes, fill = factor(diabetes))) + geom_bar() create binary variable

create binary variable

df$binary <- ifelse(df$diabetes == "neg", 0, 1)

str(df)## 'data.frame': 768 obs. of 10 variables:

## $ pregnant: num 6 1 8 1 0 5 3 10 2 8 ...

## $ glucose : num 148 85 183 89 137 116 78 115 197 125 ...

## $ pressure: num 72 66 64 66 40 74 50 0 70 96 ...

## $ triceps : num 35 29 0 23 35 0 32 0 45 0 ...

## $ insulin : num 0 0 0 94 168 0 88 0 543 0 ...

## $ mass : num 33.6 26.6 23.3 28.1 43.1 25.6 31 35.3 30.5 0 ...

## $ pedigree: num 0.627 0.351 0.672 0.167 2.288 ...

## $ age : num 50 31 32 21 33 30 26 29 53 54 ...

## $ diabetes: Factor w/ 2 levels "neg","pos": 2 1 2 1 2 1 2 1 2 2 ...

## $ binary : num 1 0 1 0 1 0 1 0 1 1 ...rows <- createDataPartition(df$binary,times=1, p=.7,list=FALSE)

# remove diabetes variable since it's collinear with binary variable.

df<-df[,-9]

names(df)## [1] "pregnant" "glucose" "pressure" "triceps" "insulin" "mass" "pedigree"

## [8] "age" "binary"train <- df[rows,]

test <- df[-rows,]

dim(train)## [1] 538 9dim(test)## [1] 230 9random forest so response variable is a factor. “ranger” is random forest in caret.

model <- train(as.factor(binary) ~ .,

data = train,

method = "ranger",

trControl = trainControl(method = "repeatedcv", number = 2, repeats = 2))model## Random Forest

##

## 538 samples

## 8 predictor

## 2 classes: '0', '1'

##

## No pre-processing

## Resampling: Cross-Validated (2 fold, repeated 2 times)

## Summary of sample sizes: 270, 268, 269, 269

## Resampling results across tuning parameters:

##

## mtry splitrule Accuracy Kappa

## 2 gini 0.7565073 0.4310475

## 2 extratrees 0.7667340 0.4362330

## 5 gini 0.7528175 0.4206111

## 5 extratrees 0.7630199 0.4424178

## 8 gini 0.7463222 0.4103567

## 8 extratrees 0.7565419 0.4292145

##

## Tuning parameter 'min.node.size' was held constant at a value of 1

## Accuracy was used to select the optimal model using the largest value.

## The final values used for the model were mtry = 2, splitrule = extratrees

## and min.node.size = 1.pred_train <- predict(model, train)

pred_test <- predict(model, test)

pred_train## [1] 1 1 0 1 1 0 1 0 1 1 1 1 0 0 1 0 0 0 0 1 0 0 0 1 0 0 0 1 0 0 0 0 0 1 0 0 1

## [38] 0 0 0 0 1 1 0 0 0 1 0 0 0 1 0 0 0 0 1 0 0 1 0 0 0 1 0 0 0 1 1 0 0 0 0 0 1

## [75] 0 0 1 1 1 0 0 1 0 0 1 0 1 1 1 1 0 0 0 0 0 0 0 0 1 0 0 0 0 1 0 0 0 1 0 0 0

## [112] 1 1 0 0 0 0 1 1 0 1 0 0 0 0 0 0 1 1 0 0 1 0 1 0 1 1 0 0 0 0 0 1 1 0 0 1 1

## [149] 1 0 1 1 1 1 0 0 0 0 1 0 0 1 0 0 1 1 1 1 0 0 0 1 1 1 0 0 0 0 0 1 1 0 0 1 0

## [186] 0 1 0 1 0 0 1 0 0 0 1 0 0 0 0 0 1 0 0 0 0 0 1 1 1 0 0 1 0 1 1 1 0 1 0 0 1

## [223] 0 1 0 1 0 1 0 1 0 1 1 0 0 1 0 1 0 0 1 0 0 0 0 0 0 0 1 0 0 1 0 1 1 0 1 0 0

## [260] 1 1 0 0 0 0 1 0 0 0 0 0 0 0 0 1 1 0 0 0 0 1 0 1 0 1 1 0 1 1 1 0 0 1 0 0 0

## [297] 0 1 1 0 1 0 1 0 0 0 1 0 0 0 0 1 0 0 1 0 0 0 1 0 0 0 0 0 1 0 0 0 0 0 0 0 0

## [334] 0 0 0 1 0 0 0 0 0 1 0 0 0 1 0 0 0 0 0 1 0 0 0 1 0 0 0 0 1 0 0 0 0 0 0 0 0

## [371] 0 0 0 0 1 0 0 1 1 0 1 0 0 0 0 0 0 0 0 0 0 0 0 1 1 0 0 0 0 0 1 0 0 0 0 0 1

## [408] 0 1 1 0 0 0 1 0 1 1 0 0 0 1 0 0 0 1 0 1 0 1 1 0 0 0 0 0 0 1 0 0 1 0 0 0 0

## [445] 1 0 0 0 1 1 1 0 0 0 1 0 0 0 1 0 1 1 0 1 1 0 0 0 0 0 1 1 0 1 0 0 1 0 0 0 0

## [482] 0 1 0 0 1 1 1 0 0 0 0 1 1 0 1 1 0 1 0 1 0 0 0 0 1 0 0 0 0 0 1 0 0 0 0 0 1

## [519] 1 0 0 1 0 0 1 0 1 1 0 0 1 1 0 1 1 0 0 0

## Levels: 0 1#create confusion matrix

confusionMatrix(pred_train, as.factor(train$binary))## Confusion Matrix and Statistics

##

## Reference

## Prediction 0 1

## 0 357 0

## 1 0 181

##

## Accuracy : 1

## 95% CI : (0.9932, 1)

## No Information Rate : 0.6636

## P-Value [Acc > NIR] : < 2.2e-16

##

## Kappa : 1

##

## Mcnemar's Test P-Value : NA

##

## Sensitivity : 1.0000

## Specificity : 1.0000

## Pos Pred Value : 1.0000

## Neg Pred Value : 1.0000

## Prevalence : 0.6636

## Detection Rate : 0.6636

## Detection Prevalence : 0.6636

## Balanced Accuracy : 1.0000

##

## 'Positive' Class : 0

## confusionMatrix(pred_test, as.factor(test$binary))## Confusion Matrix and Statistics

##

## Reference

## Prediction 0 1

## 0 126 40

## 1 17 47

##

## Accuracy : 0.7522

## 95% CI : (0.6912, 0.8066)

## No Information Rate : 0.6217

## P-Value [Acc > NIR] : 1.848e-05

##

## Kappa : 0.4444

##

## Mcnemar's Test P-Value : 0.003569

##

## Sensitivity : 0.8811

## Specificity : 0.5402

## Pos Pred Value : 0.7590

## Neg Pred Value : 0.7344

## Prevalence : 0.6217

## Detection Rate : 0.5478

## Detection Prevalence : 0.7217

## Balanced Accuracy : 0.7107

##

## 'Positive' Class : 0

## So accuracy isn’t terrible, being able to predict around 74% accuracy in the test set.

That’s the end of the course. It’s quite short and didn’t have much technical explanation of what’s happening, just a guided homework assignment basically.

Let’s see if we can improve upon the results by trying a different algorithm or by normalizing the variables.

Let’s try a neural net.

model2## Neural Network

##

## 538 samples

## 3 predictor

## 2 classes: '0', '1'

##

## No pre-processing

## Resampling: Bootstrapped (25 reps)

## Summary of sample sizes: 538, 538, 538, 538, 538, 538, ...

## Resampling results across tuning parameters:

##

## size decay Accuracy Kappa

## 1 0e+00 0.6905224 0.1395005

## 1 1e-04 0.6838481 0.1074889

## 1 1e-01 0.7127553 0.2581997

## 3 0e+00 0.7024345 0.2081525

## 3 1e-04 0.7071372 0.2044685

## 3 1e-01 0.7475514 0.3958300

## 5 0e+00 0.7115442 0.2712045

## 5 1e-04 0.7264637 0.3306077

## 5 1e-01 0.7479389 0.3970195

##

## Accuracy was used to select the optimal model using the largest value.

## The final values used for the model were size = 5 and decay = 0.1.pred_train <- predict(model2, train)

pred_test <- predict(model2, test)

pred_train## [1] 0 1 0 1 1 0 1 1 1 1 0 0 0 0 1 0 1 0 0 1 0 0 0 0 1 1 0 1 1 0 0 0 0 1 1 0 1

## [38] 0 0 0 0 0 0 0 0 0 0 0 0 0 1 0 0 0 0 0 0 0 1 0 0 0 0 0 0 0 0 1 0 0 0 0 0 0

## [75] 0 0 1 1 0 0 0 1 0 0 0 0 0 1 1 1 0 0 0 0 0 0 0 0 0 0 0 0 0 1 0 0 0 1 0 0 0

## [112] 0 0 1 0 0 0 0 1 0 1 0 0 0 0 0 0 1 0 0 0 1 0 0 0 0 1 0 0 0 0 0 1 1 0 1 0 0

## [149] 0 0 0 0 1 0 1 1 0 0 1 1 0 1 0 0 1 1 1 1 0 0 0 1 0 1 1 0 0 0 0 0 0 0 0 1 0

## [186] 0 0 0 1 0 0 0 0 0 0 0 0 0 0 1 0 0 1 0 0 0 0 0 0 0 1 1 0 0 0 1 1 0 0 0 0 1

## [223] 0 0 0 1 0 0 0 1 0 0 1 0 1 0 0 1 0 1 1 0 0 0 0 1 1 0 0 0 0 0 0 1 1 0 0 0 0

## [260] 0 1 0 0 0 0 1 0 0 0 0 0 0 0 0 0 1 0 0 0 0 1 0 0 0 1 0 0 0 1 1 0 0 0 0 0 0

## [297] 0 1 1 0 1 0 0 0 0 0 1 1 0 0 0 1 0 0 0 0 0 0 1 1 0 0 0 0 1 1 0 0 0 1 0 0 0

## [334] 0 0 0 0 0 1 0 0 0 0 0 1 0 1 1 0 0 0 0 1 0 0 0 0 0 0 0 0 1 0 0 0 0 0 0 0 0

## [371] 0 0 0 0 1 0 0 0 0 0 1 0 1 1 0 0 0 0 0 0 0 0 0 0 1 0 0 0 0 1 0 0 0 0 0 0 0

## [408] 1 1 0 0 0 0 1 0 0 1 0 0 0 1 0 0 0 1 0 1 0 0 1 0 0 0 0 0 0 0 0 0 0 0 0 0 0

## [445] 1 0 0 1 1 1 1 0 0 0 1 0 0 0 0 1 1 1 0 0 0 0 1 0 0 0 1 1 0 0 0 0 1 0 1 0 0

## [482] 0 0 0 0 1 1 1 0 0 0 0 0 1 0 1 0 0 1 0 1 0 0 0 0 1 0 0 0 1 0 0 0 0 0 0 0 0

## [519] 0 0 0 1 1 0 0 0 1 1 0 0 1 0 0 1 1 0 0 0

## Levels: 0 1#create confusion matrix

confusionMatrix(pred_train, as.factor(train$binary))## Confusion Matrix and Statistics

##

## Reference

## Prediction 0 1

## 0 320 80

## 1 37 101

##

## Accuracy : 0.7825

## 95% CI : (0.7452, 0.8167)

## No Information Rate : 0.6636

## P-Value [Acc > NIR] : 9.511e-10

##

## Kappa : 0.4826

##

## Mcnemar's Test P-Value : 0.0001032

##

## Sensitivity : 0.8964

## Specificity : 0.5580

## Pos Pred Value : 0.8000

## Neg Pred Value : 0.7319

## Prevalence : 0.6636

## Detection Rate : 0.5948

## Detection Prevalence : 0.7435

## Balanced Accuracy : 0.7272

##

## 'Positive' Class : 0

## confusionMatrix(pred_test, as.factor(test$binary))## Confusion Matrix and Statistics

##

## Reference

## Prediction 0 1

## 0 123 43

## 1 20 44

##

## Accuracy : 0.7261

## 95% CI : (0.6636, 0.7826)

## No Information Rate : 0.6217

## P-Value [Acc > NIR] : 0.0005554

##

## Kappa : 0.3859

##

## Mcnemar's Test P-Value : 0.0055758

##

## Sensitivity : 0.8601

## Specificity : 0.5057

## Pos Pred Value : 0.7410

## Neg Pred Value : 0.6875

## Prevalence : 0.6217

## Detection Rate : 0.5348

## Detection Prevalence : 0.7217

## Balanced Accuracy : 0.6829

##

## 'Positive' Class : 0

## Normalizing variables using the scale function.

model3## Neural Network

##

## 538 samples

## 3 predictor

## 2 classes: '0', '1'

##

## No pre-processing

## Resampling: Bootstrapped (25 reps)

## Summary of sample sizes: 538, 538, 538, 538, 538, 538, ...

## Resampling results across tuning parameters:

##

## size decay Accuracy Kappa

## 1 0e+00 0.7612794 0.4413553

## 1 1e-04 0.7560655 0.4365547

## 1 1e-01 0.7608518 0.4435551

## 3 0e+00 0.7416130 0.3814423

## 3 1e-04 0.7356508 0.3852349

## 3 1e-01 0.7563886 0.4278060

## 5 0e+00 0.7235114 0.3548547

## 5 1e-04 0.7212702 0.3504395

## 5 1e-01 0.7478411 0.4079670

##

## Accuracy was used to select the optimal model using the largest value.

## The final values used for the model were size = 1 and decay = 0.pred_train <- predict(model3, train)

pred_test <- predict(model3, test)

pred_train## [1] 1 1 0 0 1 0 1 1 1 1 0 0 0 0 1 0 1 0 0 1 0 0 0 0 1 0 0 1 1 0 0 0 0 1 1 0 1

## [38] 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 1 0 0 0 0 1 0 0 0 1 0 0 0 0 0 0

## [75] 0 0 1 0 0 0 0 1 0 0 0 0 0 1 0 1 0 0 0 0 0 0 0 0 0 1 0 0 0 1 0 0 0 1 1 0 0

## [112] 0 0 1 0 0 0 0 0 0 1 0 1 0 0 0 0 1 0 0 0 1 0 1 0 0 1 0 0 0 0 0 1 1 0 1 0 0

## [149] 0 0 0 0 1 1 1 1 0 0 1 1 0 0 0 0 1 1 1 1 0 0 0 0 0 1 1 0 0 0 0 0 0 0 0 1 0

## [186] 0 0 0 1 0 0 0 0 0 0 0 0 0 0 1 0 0 1 1 0 0 0 0 0 0 1 1 0 0 0 1 0 0 0 1 0 1

## [223] 0 0 0 1 0 0 0 1 0 0 1 0 1 0 0 1 0 1 1 0 0 0 0 0 0 0 0 0 0 0 0 1 1 0 1 1 0

## [260] 0 1 0 0 0 0 1 0 0 0 0 0 0 0 0 0 1 0 0 0 0 1 0 0 0 1 0 0 0 1 1 0 0 0 0 0 0

## [297] 0 1 1 0 1 0 0 0 0 0 1 1 1 0 0 1 0 0 0 0 0 0 1 1 0 0 0 0 1 1 0 0 0 0 0 0 0

## [334] 0 0 0 0 0 1 0 0 0 0 0 1 0 1 1 0 0 0 0 1 0 0 0 0 0 0 0 0 1 0 0 0 0 0 0 0 0

## [371] 0 0 0 0 1 0 0 0 0 0 1 0 1 1 0 0 0 0 0 0 0 0 0 0 1 0 0 0 0 1 0 0 0 0 0 0 0

## [408] 1 1 0 0 1 0 1 0 0 1 0 0 0 1 0 0 0 1 0 1 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

## [445] 1 0 0 1 1 1 1 0 0 0 1 0 0 0 0 1 1 1 0 0 0 0 1 0 0 0 1 1 0 0 0 0 1 0 0 0 0

## [482] 0 0 0 0 0 1 1 0 0 0 0 0 1 0 1 0 0 1 0 1 0 0 0 0 0 0 0 0 1 0 0 0 0 0 0 0 0

## [519] 0 0 0 1 1 0 0 0 1 1 0 0 1 0 0 1 1 0 0 0

## Levels: 0 1#create confusion matrix

confusionMatrix(pred_train, as.factor(train$binary))## Confusion Matrix and Statistics

##

## Reference

## Prediction 0 1

## 0 316 87

## 1 41 94

##

## Accuracy : 0.7621

## 95% CI : (0.7238, 0.7975)

## No Information Rate : 0.6636

## P-Value [Acc > NIR] : 4.188e-07

##

## Kappa : 0.4315

##

## Mcnemar's Test P-Value : 6.965e-05

##

## Sensitivity : 0.8852

## Specificity : 0.5193

## Pos Pred Value : 0.7841

## Neg Pred Value : 0.6963

## Prevalence : 0.6636

## Detection Rate : 0.5874

## Detection Prevalence : 0.7491

## Balanced Accuracy : 0.7022

##

## 'Positive' Class : 0

## confusionMatrix(pred_test, as.factor(test$binary))## Confusion Matrix and Statistics

##

## Reference

## Prediction 0 1

## 0 126 44

## 1 17 43

##

## Accuracy : 0.7348

## 95% CI : (0.6728, 0.7906)

## No Information Rate : 0.6217

## P-Value [Acc > NIR] : 0.0001946

##

## Kappa : 0.3997

##

## Mcnemar's Test P-Value : 0.0008717

##

## Sensitivity : 0.8811

## Specificity : 0.4943

## Pos Pred Value : 0.7412

## Neg Pred Value : 0.7167

## Prevalence : 0.6217

## Detection Rate : 0.5478

## Detection Prevalence : 0.7391

## Balanced Accuracy : 0.6877

##

## 'Positive' Class : 0

##

predicting diabetes using R Carets package